Ver este articulo en Español

Ver este articulo en Español

Index Key compression, evil or good?I'm going to split this post in two sections, the first one will depict what goes on inside the index when we use key compression, in order to get details of this feature inner nature and establish tendency to good or bad; the second part will provide tools to measure index key compression effects.

What happens inside the index?

I've been working with indexes big enough to save gigabytes of space, unfortunately those are not good academic examples of what is going. Therefore I will show this through a small POC (Proof Of Concept), which is a reduced and very controlled test case.

First we setup a table and one index on that table.

SQL> create table scott.t2 ( c1 varchar2(10), c2 varchar2(15), c3 number);

Table created.

SQL> create index scott.indx2 on scott.t2 ( c1, c2 );

Index created.

Then we insert some test records that provide the "repeatability" nature of data required by index compression.

SQL> insert into scott.t2 (c1,c2,c3) values ('America','Brasil',1);

insert into scott.t2 (c1,c2,c3) values ('America','Colombia',2);

insert into scott.t2 (c1,c2,c3) values ('America','Canada',3);

insert into scott.t2 (c1,c2,c3) values ('America','Costa Rica',4);

insert into scott.t2 (c1,c2,c3) values ('America','Ecuador',5);

insert into scott.t2 (c1,c2,c3) values ('America','Dominica',6);

insert into scott.t2 (c1,c2,c3) values ('America','Dominicana',7);

insert into scott.t2 (c1,c2,c3) values ('America','Peru',8);

insert into scott.t2 (c1,c2,c3) values ('America','Chile',9);

insert into scott.t2 (c1,c2,c3) values ('America','Venezuela',10);

insert into scott.t2 (c1,c2,c3) values ('America','Paraguay',11);

insert into scott.t2 (c1,c2,c3) values ('America','Uruguay',12);

insert into scott.t2 (c1,c2,c3) values ('America','Guatemala',13);

insert into scott.t2 (c1,c2,c3) values ('America','Nicaragua',14);

insert into scott.t2 (c1,c2,c3) values ('America','Bolivia',15);

insert into scott.t2 (c1,c2,c3) values ('America','Suriname',16);

insert into scott.t2 (c1,c2,c3) values ('America','Panama',17);

insert into scott.t2 (c1,c2,c3) values ('America','El Salvador',18);

insert into scott.t2 (c1,c2,c3) values ('America','Honduras',19);

commit;

You may note that "America" is a common value for every record, that establish the following premise:

index scott.indx2 may be compressed on the first column c1Now lets see how it's stored our information inside the index blocks, we will need a couple of tools here: a script that shows which blocks belong to our index and a sentence to dump the content block to readable format.

With this script you may be able to see what datafile and block points the offset or displacement desired for a given object.

SELECT

header_file,

header_block + &disp header_block

FROM dba_segments

WHERE

owner = UPPER('&owner')

AND segment_name = UPPER('&object');

When you run it at the sqlplus prompt, will give you a sequence like this:

Enter value for disp: 0

old 2: header_file, header_block + &disp header_block

new 2: header_file, header_block + 0 header_block

Enter value for owner: scott

old 6: owner = UPPER('&owner')

new 6: owner = UPPER('scott')

Enter value for object: indx2

old 7: and segment_name = UPPER('&object')

new 7: and segment_name = UPPER('indx2')

HEADER_FILE HEADER_BLOCK

----------- ------------

4 8859

Now that you have exactly which datafile and which starting block you want to dump, it's time to pass the above values to a range ALTER SYSTEM DUMP statement like this:

ALTER SYSTEM DUMP DATAFILE 4 BLOCK min 8859 block max 8866;

That will generate an ascii text dump file at the location pointed by USER_DUMP_DEST parameter (use

show parameter USER_DUMP_DEST to get present value).

Now within that file we can easily locate the index keys for the data we recently inserted, this is the content of offset block 0 (for this example, remember that results will depend on your own data).

B7D3CFD0 00000000 00000000 00000000 41070200 [...............A]

B7D3CFE0 6972656D 48086163 75646E6F 06736172 [merica.Honduras.]

B7D3CFF0 8F220001 02001200 656D4107 61636972 [.."......America]

B7D3D000 206C450B 766C6153 726F6461 22000106 [.El Salvador..."]

B7D3D010 0011008F 6D410702 63697265 61500661 [......America.Pa]

B7D3D020 616D616E 22000106 0010008F 6D410702 [nama..."......Am]

B7D3D030 63697265 75530861 616E6972 0106656D [erica.Suriname..]

B7D3D040 008F2200 0702000F 72656D41 07616369 [."......America.]

B7D3D050 696C6F42 06616976 8F220001 02000E00 [Bolivia...".....]

B7D3D060 656D4107 61636972 63694E09 67617261 [.America.Nicarag]

B7D3D070 01066175 008F2200 0702000D 72656D41 [ua..."......Amer]

B7D3D080 09616369 74617547 6C616D65 00010661 [ica.Guatemala...]

B7D3D090 0C008F22 41070200 6972656D 55076163 ["......America.U]

B7D3D0A0 75677572 01067961 008F2200 0702000B [ruguay..."......]

B7D3D0B0 72656D41 08616369 61726150 79617567 [America.Paraguay]

B7D3D0C0 22000106 000A008F 6D410702 63697265 [..."......Americ]

B7D3D0D0 65560961 757A656E 06616C65 8F220001 [a.Venezuela...".]

B7D3D0E0 02000900 656D4107 61636972 69684305 [.....America.Chi]

B7D3D0F0 0106656C 008F2200 07020008 72656D41 [le..."......Amer]

B7D3D100 04616369 75726550 22000106 0007008F [ica.Peru..."....]

B7D3D110 6D410702 63697265 6F440A61 696E696D [..America.Domini]

B7D3D120 616E6163 22000106 0006008F 6D410702 [cana..."......Am]

B7D3D130 63697265 6F440861 696E696D 01066163 [erica.Dominica..]

B7D3D140 008F2200 07020005 72656D41 07616369 [."......America.]

B7D3D150 61756345 06726F64 8F220001 02000400 [Ecuador...".....]

B7D3D160 656D4107 61636972 736F430A 52206174 [.America.Costa R]

B7D3D170 06616369 8F220001 02000300 656D4107 [ica..."......Ame]

B7D3D180 61636972 6E614306 06616461 8F220001 [rica.Canada...".]

B7D3D190 02000200 656D4107 61636972 6C6F4308 [.....America.Col]

B7D3D1A0 69626D6F 00010661 01008F22 41070200 [ombia..."......A]

B7D3D1B0 6972656D 42066163 69736172 0001066C [merica.Brasil...]

B7D3D1C0 00008F22 00000000 00000000 00000000 ["...............]

B7D3D1D0 00000000 00000000 00000000 00000000 [................]

Now it's time to see what happens when index key compression is applied to our index. Do you remember from previous posts the steps to determine the index rate, expected savings and issue the proper statement? It will look as follows:

SQL> analyze index scott.indx2 validate structure offline;

Index analyzed.

SQL> select opt_cmpr_count, opt_cmpr_pctsave from index_stats;

OPT_CMPR_COUNT OPT_CMPR_PCTSAVE

-------------- ----------------

1 26

SQL> alter index scott.indx2 rebuild compress 1;

Index altered.

Now that our index is compressed, let's see what happened at data block level. Before that, we need to determine datafile and block for the object, since the ALTER INDEX...REBUILD actually moves or changes the extents allocated to the index. Just run the script we used few paragraphs above and issue the ALTER SYSTEM DUMP with those new values.

Warning: if you are using the same session for all the exercise, the resulting dump operation will append to the trace file you already reviewed.The resulting dump will show us that "America" is no longer repeating on every index key but just once, let's see:

B7DB2060 00000000 00000000 00000000 6E655609 [.............Ven]

B7DB2070 65757A65 0106616C 008F2200 07000009 [ezuela..."......]

B7DB2080 67757255 06796175 8F220001 00000B00 [Uruguay...".....]

B7DB2090 72755308 6D616E69 00010665 0F008F22 [.Suriname..."...]

B7DB20A0 50040000 06757265 8F220001 00000700 [...Peru...".....]

B7DB20B0 72615008 61756761 00010679 0A008F22 [.Paraguay..."...]

B7DB20C0 50060000 6D616E61 00010661 10008F22 [...Panama..."...]

B7DB20D0 4E090000 72616369 61756761 22000106 [...Nicaragua..."]

B7DB20E0 000D008F 6F480800 7275646E 01067361 [......Honduras..]

B7DB20F0 008F2200 09000012 74617547 6C616D65 [."......Guatemal]

B7DB2100 00010661 0C008F22 450B0000 6153206C [a..."......El Sa]

B7DB2110 6461766C 0106726F 008F2200 07000011 [lvador..."......]

B7DB2120 61756345 06726F64 8F220001 00000400 [Ecuador...".....]

B7DB2130 6D6F440A 63696E69 06616E61 8F220001 [.Dominicana...".]

B7DB2140 00000600 6D6F4408 63696E69 00010661 [.....Dominica...]

B7DB2150 05008F22 430A0000 6174736F 63695220 ["......Costa Ric]

B7DB2160 00010661 03008F22 43080000 6D6F6C6F [a..."......Colom]

B7DB2170 06616962 8F220001 00000100 69684305 [bia..."......Chi]

B7DB2180 0106656C 008F2200 06000008 616E6143 [le..."......Cana]

B7DB2190 01066164 008F2200 06000002 73617242 [da..."......Bras]

B7DB21A0 01066C69 008F2200 07000000 696C6F42 [il..."......Boli]

B7DB21B0 06616976 8F220001 00080E00 656D4107 [via..."......Ame]

B7DB21C0 61636972 00000000 00000000 00000000 [rica............]

B7DB21D0 00000000 00000000 00000000 00000000 [................]

If you compare space usage between dumps, savings get near the 26% percent forecasted by the ANALYZE, and probes what Oracle promises in their documents about index key compression.

Measure index key compression gains...or losesThere are two approaches for this question:

1) Theoretical through estimations

2) Practical through testing

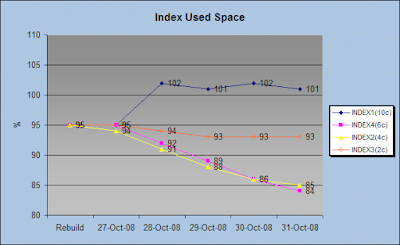

Estimations based on size reduction, are supported with results from ALL_INDEXES, DBA_SEGMENTS or INDEX_STATS. And it's recommended to take a snapshot before compression, and another after compression and then compare the number of total blocks, the number of branch blocks and leaf blocks among them.

Estimations based on execution plans, require that you collect all SQL that involves the table for the index in spot, take samples of execution plans before and after compression, and verify if the compressed index yields benefits or rises the cost. This task may seem tedious, however has the advantage that you will know better the queries that run over your database, even pinpoint current problems and suggest tuning performance solutions with greater returns than index key compression.

Practical testing or 'running tests' may better show the results, because it may consider activating trace, observe actual execution plans that may differ from those generated with EXPLAIN PLAN, sample precise statistics on index usage with queries on V$SEGMENT_STATISTICS, which is available since Oracle 9i.

Just remember, you must have a baseline to compare with; it may be some production sampling, or a test environment where you may compare before and after within the same database.

When is index key compression definitely

evil? right at the time the ALTER INDEX ... REBUILD is issued. Yes, the very act of rebuilding our index will reorganize it, so blocks will be filled with index keys upto the PCTUSED value, that may highly increase the probability of leaf splitting, which is an overhead we as DBAs must avoid... do you figure how to avoid this? if you have a clue, please leave a comment, let's interact and discuss this...

Don't miss the next issue, we are going to show examples of practical measurement.

View previous post:

Honey, I shrunk the indexes - Part 2 Subscribe to Oracle Database Disected by Email

Subscribe to Oracle Database Disected by Email

Ver este articulo en Español

Ver este articulo en Español